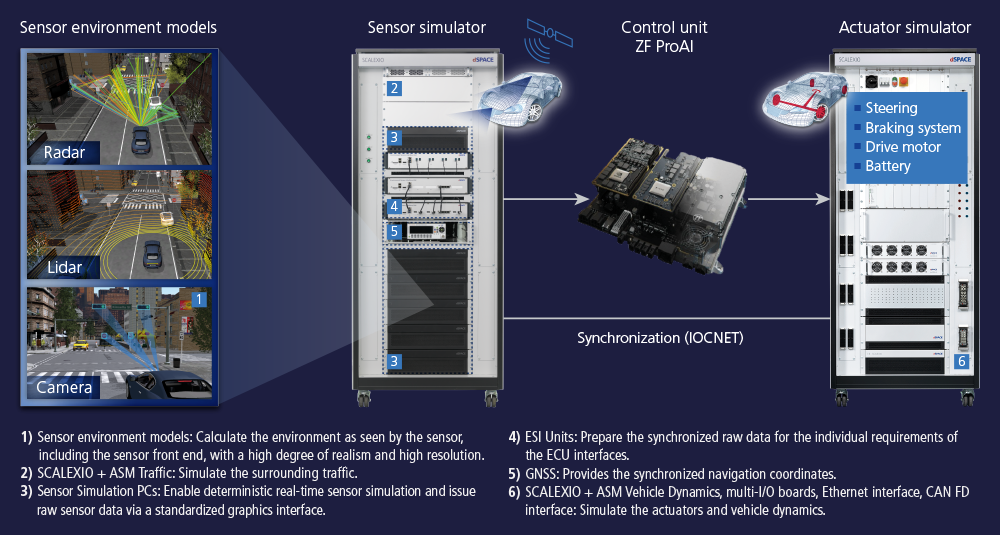

The exciting new challenge developers faced was how to validate a vehicle whose control system makes independent decisions. ZF has met this challenge by combining traditional HIL technology with a sensor-realistic environment simulation. The test system designed for this purpose is based on the dSPACE tool chain.

Getting a conventional driver-operated vehicle ready for the road with a high level of quality and comfort takes considerable time and effort during the development and validation phases, especially because cost and time targets always have to be met. Introducing autonomous transport systems to the road raises quality, efficiency and safety requirements to completely new levels. The resulting complexity in the development phase can be overcome only by using particularly lean methods and tool chains. After all, it is not only about successfully introducing autonomous functions to the road, but also about being able to apply them safely under any weather, traffic or visibility conditions.

Autonomous Technology Platform

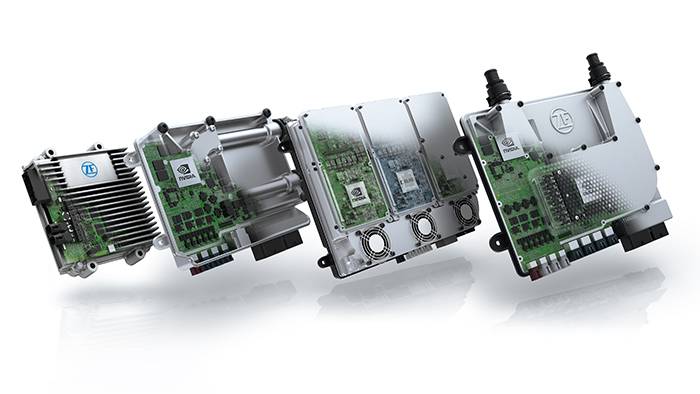

ZF is driving the development of a technology platform designed for an autonomous, battery-powered people mover. This feat highlights ZF’s extensive expertise as a system architect for autonomous driving. To this end, the technology group uses its sophisticated network of experts – especially to determine and process environment and sensor data. The project also demonstrates the performance and practicality of the ZF ProAI supercomputer, which was introduced by ZF and NVIDIA only a year ago. The computer acts as the central control unit in the vehicle. The goal is a system architecture that is scalable and can be transferred to any vehicle, depending on the intended use, available hardware equipment and desired level of automation.

Design of the Autonomous System

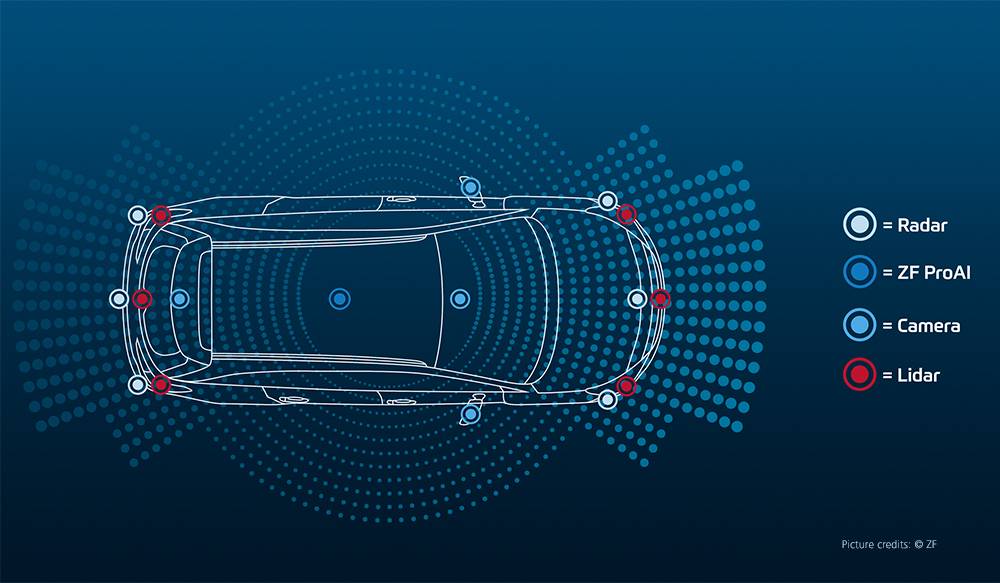

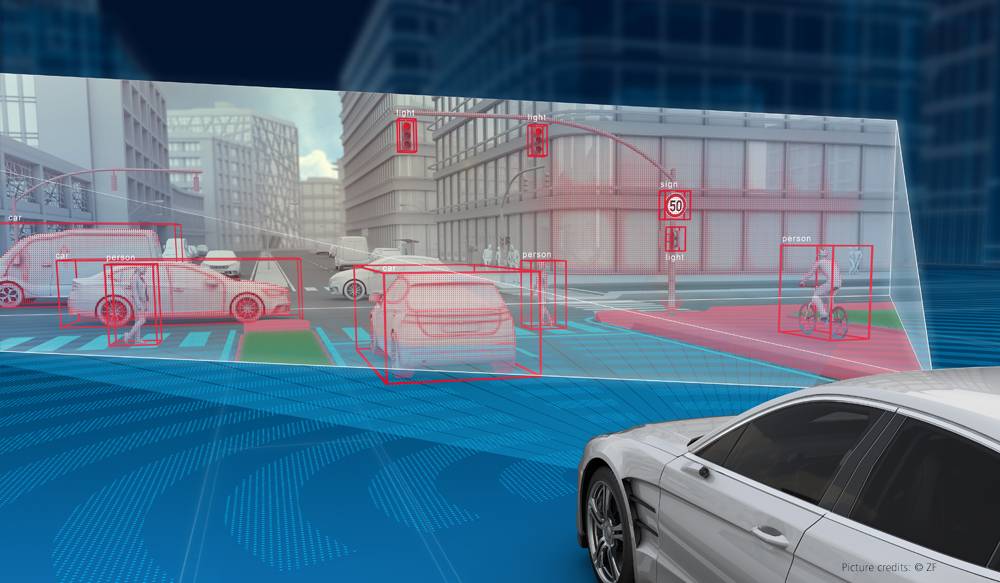

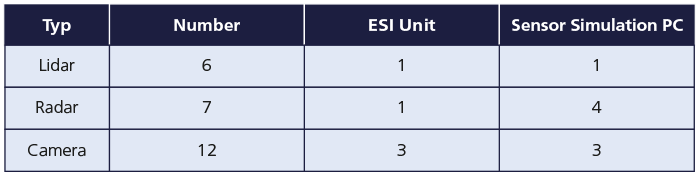

The vehicle is equipped with six lidar sensors, seven radar sensors, and twelve camera sensors for environment detection. A global navigation satellite system (GNSS) determines the exact location. All sensor data is combined in the ZF ProAI central control unit. The control unit preprocesses and evaluates the data using the typical steps of perception, object identification and data fusion, and it also calculates the driving strategy. From these results, it generates the control signals for the actuators (steering, driveline, and brake system). Algorithms that are partly based on artificial intelligence (AI) analyze the sensor data. Above all, the AI software accelerates data analysis and improves the precision of object recognition. The aim is to use the wealth of data to identify recurring patterns in traffic situations, such as a pedestrian crossing the road.

Validation Concept

An important validation step for electronic control units (ECUs) is the integration test, which involves testing an ECU combined with all sensors, actuators, and the electrics/electronics (E/E) architecture of the vehicle. This comprehensive view is important to fully validate all driving functions, including the involved components (sensors, actuators), and to evaluate the vehicle behavior. Hardware-in-the-loop (HIL) simulation is an established method for integration tests. Therefore, the development project contains a validation step that uses a HIL test solution.

Artificial Intelligence

Artificial Intelligence (AI) is a branch of computer science that addresses the automation of intelligent behavior and machine learning. In general, artificial intelligence is the attempt to reproduce certain human decision-making structures by building and programming a computer in such a way that it can work on problems relatively independently.

HIL Simulator Concept

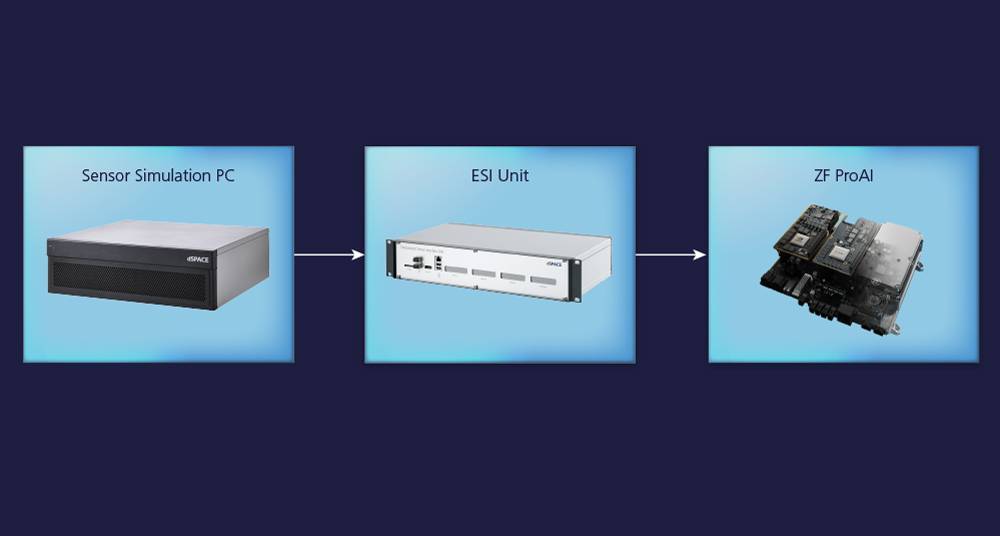

ZF worked with dSPACE to develop the concept for the HIL simulator. The simulator is based on SCALEXIO technology and is used to simulate the entire vehicle, including steering, brakes, electric drive, vehicle dynamics, and all sensors. On the ECU input side, the simulator transmits all sensor signals. On the output side, it provides a restbus simulation as well as the I/O required for HIL operation of the vehicle actuators. To ensure a realistic simulation, the dSPACE Automotive Simulation Model (ASM) tool suite is used to calculate the vehicle and the vehicle dynamics in real time, both for the sensors and for the vehicle itself. This poses a particular challenge because AI systems themselves have neither “hard” real-time properties nor linear dependencies. The AI control unit is therefore “inserted” between the synchronized simulators for the sensors and actuators and thus operated exactly as under real conditions in the vehicle.
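The closed loop described above (simulated sensors feed the control unit, whose commands drive simulated actuators and vehicle dynamics) can be illustrated with a minimal sketch. It is not the dSPACE or ZF implementation: the one-dimensional vehicle model, the single obstacle, the function names, and all numeric values are assumptions chosen purely for illustration, and a plain Python loop offers no real-time guarantees.

```python
from dataclasses import dataclass


@dataclass
class VehicleState:
    position_m: float = 0.0
    speed_mps: float = 0.0


def simulate_sensors(state, obstacle_position_m):
    """Hypothetical sensor model: reports the gap to one obstacle ahead."""
    return {
        "distance_m": obstacle_position_m - state.position_m,
        "speed_mps": state.speed_mps,
    }


def controller_under_test(frame, target_speed_mps=10.0, safe_gap_m=20.0):
    """Stand-in for the control unit: returns an acceleration command in m/s^2."""
    if frame["distance_m"] < safe_gap_m:
        return -5.0  # brake when the obstacle is close
    return 2.0 if frame["speed_mps"] < target_speed_mps else 0.0


def step_vehicle(state, accel_mps2, dt_s):
    """Hypothetical actuator and dynamics model: simple 1-D integration."""
    speed = max(0.0, state.speed_mps + accel_mps2 * dt_s)
    return VehicleState(state.position_m + speed * dt_s, speed)


def run_closed_loop(steps=2000, dt_s=0.01, obstacle_position_m=100.0):
    """Run sensors -> controller -> actuators for a fixed number of steps."""
    state = VehicleState()
    for _ in range(steps):
        frame = simulate_sensors(state, obstacle_position_m)
        accel = controller_under_test(frame)
        state = step_vehicle(state, accel, dt_s)
    return state
```

Calling `run_closed_loop()` returns the final vehicle state, which a test case could then compare against a pass criterion such as "the vehicle has stopped before the obstacle".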

Sensor Emulation

The ZF ProAI control unit is designed to primarily process all raw sensor data directly. In addition, the sensor data is also read in as object lists. The object lists are provided by the ASM Traffic Model as part of a ground truth simulation of the surrounding traffic. For the raw data, all sensors must be emulated as realistically as possible.
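To make the idea of a ground-truth object list more concrete, the following sketch converts world-frame traffic objects into an ego-relative list. The data layout, field names, and range filter are hypothetical; the actual format produced by the ASM Traffic Model is not described in this article.

```python
import math
from dataclasses import dataclass


@dataclass
class TrafficObject:
    object_id: int
    kind: str      # e.g. "car" or "pedestrian" (illustrative labels)
    x_m: float     # world-frame position
    y_m: float


def to_object_list(ego_x_m, ego_y_m, ego_yaw_rad, objects, max_range_m):
    """Return objects within range, expressed in the ego vehicle's frame."""
    cos_yaw, sin_yaw = math.cos(ego_yaw_rad), math.sin(ego_yaw_rad)
    object_list = []
    for obj in objects:
        dx, dy = obj.x_m - ego_x_m, obj.y_m - ego_y_m
        # Rotate the world-frame offset into the ego frame.
        lon = cos_yaw * dx + sin_yaw * dy    # longitudinal (ahead of ego)
        lat = -sin_yaw * dx + cos_yaw * dy   # lateral (left of ego)
        if math.hypot(lon, lat) <= max_range_m:
            object_list.append(
                {"id": obj.object_id, "kind": obj.kind, "lon_m": lon, "lat_m": lat}
            )
    return object_list
```

Because such a list comes straight from the simulation's own state, it contains no sensor noise or occlusion, which is what makes it useful as a reference next to the emulated raw data.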

Highly Accurate Sensor Environment Simulation

The generation of raw sensor data requires models that calculate the sensor environment based on defined test scenarios and simulate it with physical accuracy. The physical radar, lidar and camera models from the dSPACE tool chain are used for this purpose. These high-precision and high-resolution models calculate the transmission path between the environment and the sensor, including the sensor front end. The ray tracer of the radar and lidar models renders the entire transmission path from the transmitter to the receiver unit and supports multipath propagation. Several million beams are emitted in parallel. The exact number depends on the respective 3-D scene. In both models, the reflection and diffusion of complex objects are calculated based on physical behavior. It is also possible to specify the number of ‘hops’ for multipath propagation. The lidar model is designed for both flash and scanning sensors. The camera model can accommodate different lens types and optical effects, such as chromatic aberration or dirt on the lens. Since all models are extremely complex, model components must be calculated on graphics processing units (GPUs) to meet the real-time demands. The NVIDIA P6000-equipped Sensor Simulation PC, which seamlessly integrates into the dSPACE real-time system, is used for this purpose.
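The principle behind a ray-based sensor model can be shown with a deliberately simplified sketch: a single ray in 2-D, a single circular target, and a direct path only. This is an illustrative assumption, not the dSPACE models, which trace millions of rays through a 3-D scene on GPUs and account for multipath propagation and material behavior.

```python
import math

SPEED_OF_LIGHT_MPS = 299_792_458.0


def ray_circle_range(origin, direction, center, radius):
    """Distance along a ray to its first hit on a circle, or None on a miss.

    Simplification: an origin inside the circle is also treated as a miss.
    """
    dx, dy = direction
    norm = math.hypot(dx, dy)
    dx, dy = dx / norm, dy / norm            # normalize the direction
    fx, fy = origin[0] - center[0], origin[1] - center[1]
    # Solve |f + t*d|^2 = r^2 for t (quadratic with leading coefficient 1).
    b = 2.0 * (fx * dx + fy * dy)
    c = fx * fx + fy * fy - radius * radius
    discriminant = b * b - 4.0 * c
    if discriminant < 0.0:
        return None                          # ray misses the circle
    t = (-b - math.sqrt(discriminant)) / 2.0  # nearer intersection
    return t if t >= 0.0 else None


def round_trip_time_s(range_m):
    """Time of flight from transmitter to target and back to the receiver."""
    return 2.0 * range_m / SPEED_OF_LIGHT_MPS
```

For a target circle of radius 1 m centered 10 m ahead, `ray_circle_range((0, 0), (1, 0), (10, 0), 1.0)` gives a range of 9 m, and `round_trip_time_s` converts that range into the delay a lidar or radar receiver would observe on the direct path.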

Generating the Test Scenarios

The most important part of testing autonomous vehicles is generating suitable scenarios that can be used to reliably test and validate functions for autonomous driving. The Scenario Editor is used for this purpose. It can create complex surrounding traffic using convenient graphical methods. These scenarios consist of the maneuvers of an ego-vehicle (the vehicle to be tested including its sensors), the maneuvers of the surrounding traffic, and the infrastructure (roads, traffic signs, roadside structures, etc.). This creates a virtual, realistic 3-D world that is captured by the vehicle sensors. The flexible settings allow for a variety of tests, ranging from the exact implementation of standardized Euro NCAP specifications to individually structured, complex scenarios in urban areas. The 3-D environment, including the 3-D objects of the vehicles as well as the sensor environment models, is simulated in real time using ASM. The trajectories of the road users are simulated using the ASM Traffic Model.
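The scenario structure described above (ego maneuvers, surrounding traffic, infrastructure) lends itself to systematic variation. The sketch below shows one possible way to represent a scenario as data and to generate variants by sweeping parameters. All class names, fields, and values are hypothetical; they do not reflect the Scenario Editor's file format or any Euro NCAP specification.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Maneuver:
    actor: str
    start_s: float
    action: str
    target_speed_kph: float


@dataclass
class Scenario:
    name: str
    ego_maneuvers: list
    traffic_maneuvers: list
    infrastructure: list
    weather: str = "clear"


def build_variants(ego_speeds_kph, weathers):
    """Create one pedestrian-crossing scenario per speed/weather combination."""
    variants = []
    for speed, weather in product(ego_speeds_kph, weathers):
        variants.append(
            Scenario(
                name=f"pedestrian_crossing_{speed}kph_{weather}",
                ego_maneuvers=[Maneuver("ego", 0.0, "follow_lane", speed)],
                traffic_maneuvers=[Maneuver("pedestrian_1", 2.0, "cross_road", 5.0)],
                infrastructure=["two_lane_road", "crosswalk"],
                weather=weather,
            )
        )
    return variants
```

Sweeping two ego speeds and two weather conditions, for example `build_variants([20, 40], ["clear", "rain"])`, yields four scenarios that differ only in the swept parameters, which keeps test results comparable across the catalog.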

The two HIL racks contain all components for sensor simulation: SCALEXIO real-time platform, Sensor Simulation PCs, and ESI Units. The ZF ProAI control unit to be tested is located in the left-hand rack. The simulator for the actuators is not shown.

Components of Sensor Simulation

The real-time sensor environment simulation is implemented using the following components:

ZF ProAI

The ZF ProAI control unit provides high computing power and artificial intelligence (AI) for functions that enable automated driving. It uses an extremely powerful and scalable NVIDIA platform to process signals from camera, lidar, radar, and ultrasound sensors. It understands what is happening around the vehicle in real time and gathers experience through deep learning.

Benefits

- AI-capable

- Computing power of up to 150 TeraOPS (= 150 trillion computing operations per second), depending on the model

- Ready for functions that enable automated and autonomous driving

- Highly scalable interfaces and functions

Validating the Autonomous Vehicle

The HIL simulator network presented here helps developers analyze the overall vehicle behavior of the virtualized technology platform under conditions that are fundamental for further development. This includes scenarios in test areas where the first vehicles delivered have to find their way completely autonomously. The simulator tests the vehicles’ safe driving capabilities even when unforeseeable events occur in rain, snow, or on black ice, to name a few examples. Other typical HIL test methods are added, such as generating faults in the E/E system, for example broken wires, short circuits, or bus system errors. These comprehensive, continuously expanding test catalogs ensure that the safety-critical autonomous system is efficiently validated in terms of functionality.
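Electrical fault generation of the kind mentioned above can be pictured as a layer between a simulated signal and the control unit's input. The sketch below is a simplified assumption: the fault types, the 12 V supply level, and the function names are hypothetical, and in a real HIL system such faults are switched in hardware rather than in software.

```python
from enum import Enum


class Fault(Enum):
    NONE = "none"
    OPEN_CIRCUIT = "open_circuit"          # broken wire
    SHORT_TO_GROUND = "short_to_ground"
    SHORT_TO_SUPPLY = "short_to_supply"


def apply_fault(voltage_v, fault, supply_v=12.0):
    """Return the voltage seen at the ECU pin, or None if the line is open."""
    if fault is Fault.OPEN_CIRCUIT:
        return None
    if fault is Fault.SHORT_TO_GROUND:
        return 0.0
    if fault is Fault.SHORT_TO_SUPPLY:
        return supply_v
    return voltage_v


def is_plausible(voltage_v, low_v=0.5, high_v=4.5):
    """Hypothetical diagnostic check: valid sensor voltages lie in [low, high]."""
    return voltage_v is not None and low_v <= voltage_v <= high_v
```

A test case would then inject each fault in turn and check that the system under test detects the implausible signal and reacts safely.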

The HIL simulator synchronously generates the surrounding environment of the radar, lidar, and camera sensors, including their front end, in real time and subsequently provides it to the ZF ProAI control unit. ZF ProAI controls the simulated actuators according to the driving strategy.

Deep Learning

ZF engineers use the simulator to “train” the vehicle in various driving functions. Training focuses particularly on urban traffic situations: for example, interaction with pedestrians and groups of pedestrians at crosswalks, collision assessment, and behavior at traffic lights and in roundabouts. Compared with highway or country road driving, it is much more difficult in urban areas to establish the thorough understanding of the current traffic situation that a computer-controlled vehicle needs as the basis for appropriate actions.

At a Glance

The Task

- Validating an autonomous, battery-electric technology platform

- Testing the AI-based vehicle guidance

The Challenge

- Emulating all sensors in real time

- Setting up a sensor-realistic real-time simulation of the sensor environment (3-D environment)

- Simulating the complete vehicle behavior in realistic traffic

The Solution

- Setting up a real-time platform for the high-precision simulation of radar, lidar, and camera sensors

- Simulating traffic, vehicle dynamics and electric drives in real time

- Conducting tests using easily adjustable scenarios in a virtual 3-D environment

About the author:

Oliver Maschmann

Oliver Maschmann is project manager at ZF in Friedrichshafen and responsible for the setup and operation of the HIL test benches for full vehicle integration testing.