How can artificial intelligence solve the particularly challenging tasks of autonomous driving along the processing chain from perception through situation analysis to behavior planning? The technology company Bosch shows how to train neural networks efficiently with annotated sensor data from understand.ai.

Innovative mobility concepts, such as highly automated or autonomous driving, place enormous demands on the safety and reliability of technical systems. Developing particularly reliable control systems for autonomous driving up to SAE Level 5 efficiently requires suitable technologies. Here, conventional control-based approaches compete with the capabilities of trained neural networks. When executed on fast graphics processing units (GPUs), neural networks are particularly suitable for processing the vast amounts of data from high-resolution sensors.

Identifying AI Application Areas

In this context, the first step is to identify potential application areas for artificial intelligence (AI) along the entire processing chain from perception to situation analysis and behavior planning. In addition, particularly promising methods from the field of machine learning must be evaluated. This is the starting point of a project in which Bosch investigates both AI application areas and learning methods with a focus on multimodal perception, i.e., the environment perception of a vehicle with the merged data of video, radar, and lidar sensors.

Setting up a High-Diversity Data Set

The raw data recorded by the vehicle's sensors during real drives is used as training data. The recorded traffic environments must be very diverse (highways, country roads, urban areas, traffic objects, traffic scenarios, etc.). This is achieved in particular by defining the ideal types and characteristics of routes as well as route categories. For the real drives, routes that match the definitions and cover all defined categories are then selected.
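The coverage requirement can be illustrated with a small bookkeeping check. The following is only a sketch under stated assumptions: the category names, the record layout, and the `coverage_report` helper are hypothetical and do not describe the tooling used in the project.

```python
from collections import Counter

# Hypothetical route categories, loosely based on the examples in the text.
REQUIRED_CATEGORIES = {"highway", "country_road", "urban"}


def coverage_report(recordings, required=REQUIRED_CATEGORIES):
    """Count recordings per category and list required categories without any recording."""
    counts = Counter(rec["category"] for rec in recordings)
    missing = sorted(required - set(counts))
    return counts, missing


# Hypothetical drive log: each entry is one recorded drive.
recordings = [
    {"id": "drive_001", "category": "highway"},
    {"id": "drive_002", "category": "urban"},
    {"id": "drive_003", "category": "highway"},
]

counts, missing = coverage_report(recordings)
print("recordings per category:", dict(counts))
print("categories still missing:", missing)
```

A check like this would flag that no country-road drive has been recorded yet, so an additional route of that category would have to be selected.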

Training Neural Networks Using Supervised Learning

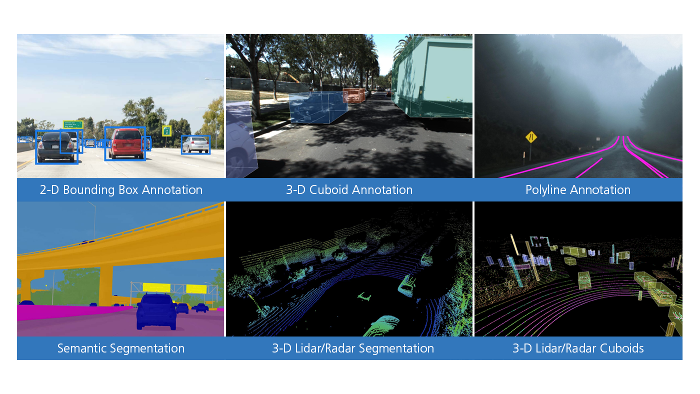

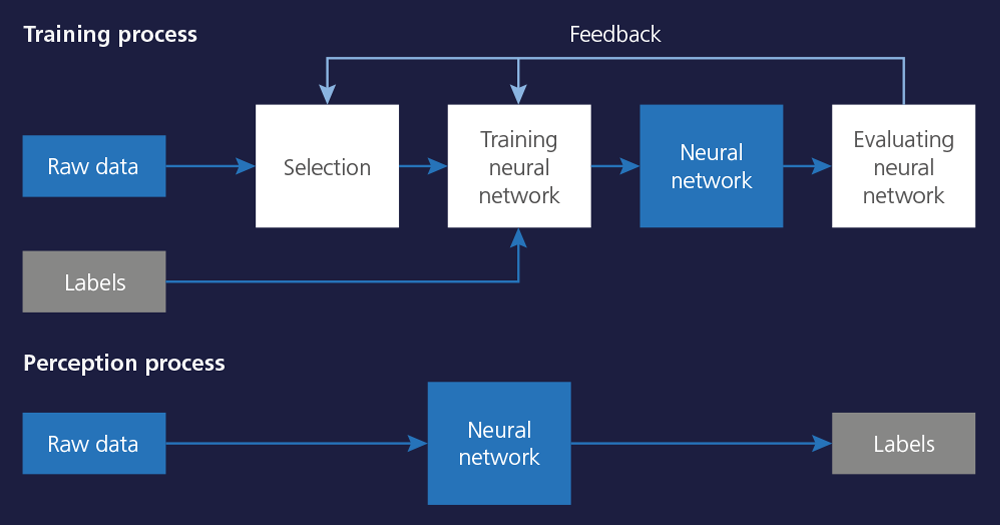

Like the human brain, neural networks learn by means of positive and negative examples: The paths that lead to correct results are maintained, while those that lead to incorrect results are discarded. Identifying correct results requires both tasks and solutions. In the context of systems for autonomous driving, the tasks are typically the raw sensor data and the solutions are the objects to be detected. This approach is called supervised learning. The solutions are provided in a preceding step as markings (labels/annotations), resulting in reference data (raw data plus label/annotation).
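The principle of learning from tasks and solutions can be shown with a toy example. The sketch below trains a single artificial neuron (a perceptron) on a handful of labeled samples; it is far simpler than the deep networks discussed in this article, and the features (object length and height in meters) and labels are invented for illustration.

```python
def predict(weights, bias, features):
    """Return 1 if the weighted sum is positive, otherwise 0."""
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if score > 0 else 0


def train_perceptron(samples, labels, epochs=20, learning_rate=0.1):
    """Adjust the weights whenever a prediction disagrees with its label."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in zip(samples, labels):
            error = label - predict(weights, bias, features)  # 0 if correct
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias


# Hypothetical reference data: (length, height) in meters plus a label.
samples = [(0.5, 1.8), (0.6, 1.7), (4.5, 1.5), (4.2, 1.4)]
labels = [0, 0, 1, 1]  # 0 = pedestrian, 1 = car

weights, bias = train_perceptron(samples, labels)
predictions = [predict(weights, bias, s) for s in samples]
print(predictions)
```

Correct predictions leave the weights unchanged, while incorrect ones shift them toward the labeled solution, which mirrors the idea of keeping what works and discarding what does not.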

Learning Material for Neural Networks

Successful (machine) learning requires the use of high-quality learning material. Therefore, relevant objects that AI has to recognize by itself later (pixel patterns etc.) must be precisely marked and classified in the data. Since this step entails very high, partly manual efforts, the anonymized data was transferred to the service provider understand.ai, a dSPACE group company that specializes in labeling automation. Bosch and understand.ai agreed on precise quality goals to ensure successful training.

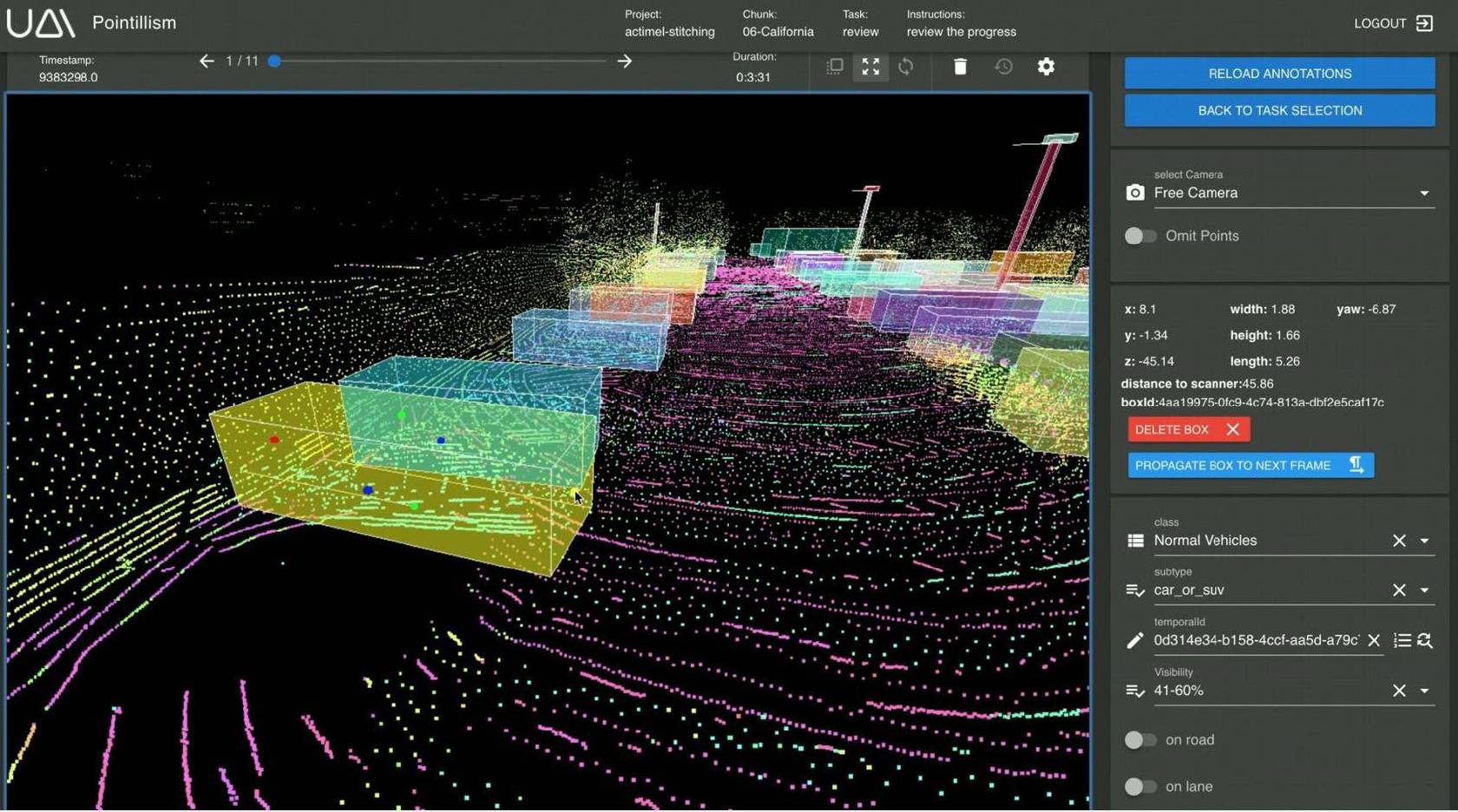

3-D Annotation of Lidar Data

Annotation was performed with data from lidar sensors. The objects were marked with high-precision bounding boxes placed in the 3-D point clouds of the lidar. The camera sensor data was used for plausibility checks. An iterative approach proved successful for achieving the defined quality goals. In this approach, interim results were reviewed and discussed in feedback loops with understand.ai. These feedback loops and the ongoing refinement of requirements helped ensure the required level of quality from an early stage and throughout the project.
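To make the notion of a 3-D bounding box in a point cloud concrete, the following sketch selects the lidar points that fall inside one box. The box parameterization (center, size, and yaw angle around the vertical axis) is a common convention but an assumption here; the article does not specify the annotation format that was used.

```python
import math


def points_in_box(points, center, size, yaw):
    """Return the points inside a box given by center, (length, width, height), and yaw."""
    cx, cy, cz = center
    length, width, height = size
    cos_yaw, sin_yaw = math.cos(yaw), math.sin(yaw)
    inside = []
    for x, y, z in points:
        dx, dy, dz = x - cx, y - cy, z - cz
        # Rotate the offset by -yaw to express it in the box's own frame.
        local_x = cos_yaw * dx + sin_yaw * dy
        local_y = -sin_yaw * dx + cos_yaw * dy
        if (abs(local_x) <= length / 2
                and abs(local_y) <= width / 2
                and abs(dz) <= height / 2):
            inside.append((x, y, z))
    return inside


# Hypothetical point cloud and a car-sized box 10 m ahead of the sensor.
cloud = [(10.0, 0.0, 1.0), (11.9, 0.9, 1.5), (13.0, 0.0, 1.0)]
hits = points_in_box(cloud, center=(10.0, 0.0, 1.0), size=(4.0, 2.0, 2.0), yaw=0.0)
print(len(hits), "of", len(cloud), "points lie inside the box")
```

Counting the points inside a box is also one simple way to sanity-check an annotation: a box that contains no points at all is probably misplaced.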

Special Challenges During Annotation

Annotation poses special challenges, such as differentiating between cars and vans or detecting vehicles with roof boxes or bicycle racks. Expert knowledge and powerful tools make the difference in these complex tasks. With its web- and AI-based tools for object detection and prediction, understand.ai was able to identify boundary cases and show sophisticated solutions.

Supervised Learning with Annotated Sensor Data

The annotated data is used to identify potential application areas for AI. Networks are trained for specific applications and their behavior and performance are evaluated. Depending on the depth of the network and the amount of data, training can take several days or weeks. A prerequisite for successful training processes is a coordinated IT infrastructure equipped with powerful GPU-based computer clusters.

Results and Accuracy

Highly accurate annotation is an indispensable prerequisite for supervised learning, because the quality of the reference data determines the subsequent ability of the AI to identify objects unambiguously. understand.ai achieved the expected and specified annotation quality. Nevertheless, for tasks of this complexity, annotation can be good but will not be perfect. As in other areas of development, annotation is subject to a continuous learning process in which workflows and tools are steadily adapted and optimized to achieve the highest possible quality. Established processes, powerful tools, and efficient feedback cycles lead to the desired results. The experience and expertise of recognized annotation specialists are invaluable for an economical and efficient approach.
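The article does not state how annotation quality was measured. One widely used metric for comparing an annotated box with a reference box is intersection over union (IoU). The sketch below computes it for axis-aligned 3-D boxes only; the box format and the example values are assumptions made for illustration.

```python
def box_volume(box):
    """Volume of an axis-aligned box (xmin, ymin, zmin, xmax, ymax, zmax)."""
    return (box[3] - box[0]) * (box[4] - box[1]) * (box[5] - box[2])


def iou_3d(box_a, box_b):
    """Intersection over union of two axis-aligned 3-D boxes."""
    intersection = 1.0
    for axis in range(3):
        lower = max(box_a[axis], box_b[axis])
        upper = min(box_a[axis + 3], box_b[axis + 3])
        if upper <= lower:
            return 0.0  # no overlap along this axis
        intersection *= upper - lower
    union = box_volume(box_a) + box_volume(box_b) - intersection
    return intersection / union


# Hypothetical annotated box versus reference box, shifted by 1 m along x.
annotated = (0.0, 0.0, 0.0, 2.0, 2.0, 2.0)
reference = (1.0, 0.0, 0.0, 3.0, 2.0, 2.0)
print(round(iou_3d(annotated, reference), 3))
```

An IoU of 1.0 means the two boxes coincide and 0.0 means they do not overlap; a quality goal could then be expressed as a minimum IoU per object class.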

Outlook: Annotating Surround-View Data

A new measurement campaign is planned for 360° environment detection, in which the vehicle environment is recorded in high resolution with camera, lidar, and radar sensors. This creates new challenges in terms of data volume, synchronous processing, and annotation of the merged data. The subsequent steps for this project are currently being discussed by the experts from understand.ai and Bosch.

About the authors:

Dr. Claudius Gläser

BOSCH Corporate Research

Dr. Florian Faion

BOSCH Corporate Research