The development of autonomous vehicles usually focuses on the transport of people and goods. The Korea Institute of Industrial Technology (KITECH) is now using an autonomous street sweeper to demonstrate that autonomous driving also plays an important role outside the transport sector.

Using dSPACE technology, such as the scenario generation service, the simulation solution AURELION, and the Automotive Simulation Models (ASM), KITECH successfully set up a test system that can validate the software of an autonomous street sweeper for use in its future area of deployment. Among other data sources, the team used existing measurement data to generate realistic scenarios.

Real Streets as a Virtual Representation

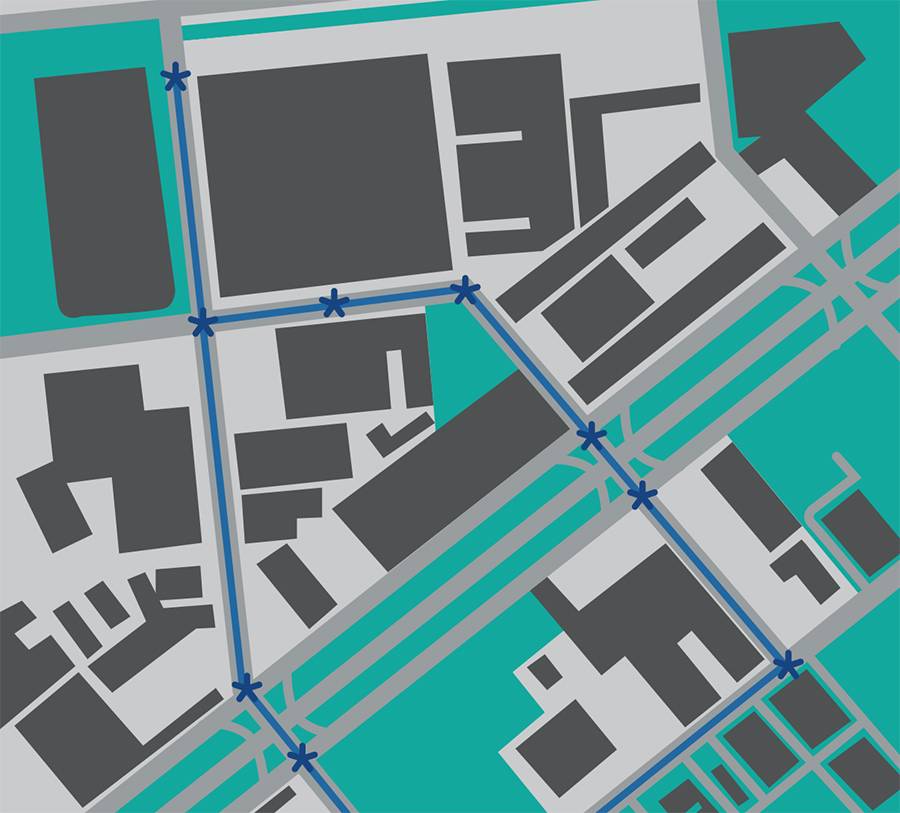

KITECH used measurement data recorded by vehicle sensors to rebuild a 2.3 km track of roads in the South Korean city of Pyeongdong in a virtual 3-D environment. For this area of deployment (Figure 1), sensor-realistic test scenarios for operating the street sweeper are generated and then executed. Implementing such a project requires precisely the kind of solutions that dSPACE focuses on as a partner for simulation and validation, so the dSPACE tool chain was able to provide vital support throughout the entire process.

Safety-Critical Scenarios Included

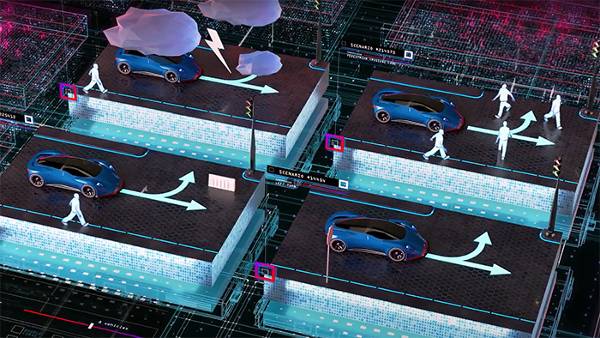

The aim of this simulation is to validate a range of scenarios that can occur while an autonomous street sweeper is on the road, including critical scenarios as well as everyday situations such as turning vehicles. The project also focuses on verifying the localization, the planning logic, the trajectory recording, and the speed control algorithms. Simulation-based verification is particularly important for algorithms that come into play in safety-critical driving scenarios, because these algorithms are difficult to verify in real test drives.

From Street to Simulation

Test drives in computer-generated scenarios are indispensable for making the vision of driverless street sweepers a reality. To make the scenarios as realistic as possible, the models and data used have to replicate the real world as precisely as possible. Because this replica is used for sensor simulation in the test system, precision is of utmost importance, especially for autonomous vehicles, which use their sensors to find their way around a virtual map.

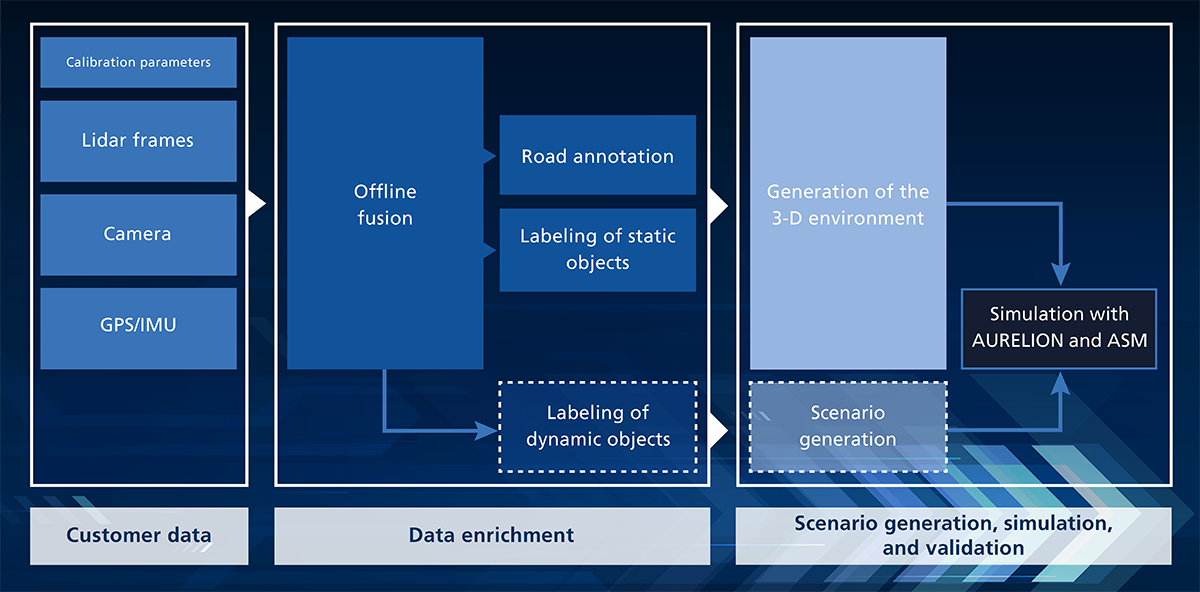

Creating Simulation Scenarios – Almost Automatically

A highly automated process (Figure 2) uses the available vehicle sensor data to create the artifacts required for simulation, namely the simulation scenarios. First, the available measurement data is aligned in time and space within a global reference system. Second, relevant information is extracted using AI-based annotation: the annotation tool from understand.ai, a dSPACE company, generates the required annotations. Third, the extracted information is translated into simulation-capable scenarios. Each simulation is based on an accurate road model. This project uses the road model of the 2.3 km track, which contains ten intersections and all road markings that the camera can detect.
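The first step, placing measurement data in a common reference system in time and space, can be illustrated with a small sketch. It assumes that all sensors already share a synchronized clock and that the vehicle trajectory comes from timestamped GPS fixes. Each sensor sample is then given a global position by interpolating the trajectory at the sample's timestamp. The names `GpsFix`, `to_local_xy`, `position_at`, and `align_samples` are hypothetical, and the flat-Earth projection is a simplification chosen for a track of a few kilometers. It is not the projection used by the dSPACE tool chain.

```python
import bisect
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; spherical-Earth assumption


@dataclass
class GpsFix:
    t: float    # timestamp in seconds on the shared (synchronized) clock
    lat: float  # latitude in degrees
    lon: float  # longitude in degrees


def to_local_xy(lat, lon, lat0, lon0):
    """Project lat/lon (degrees) to east/north meters around origin (lat0, lon0).

    Equirectangular approximation: adequate over a few kilometers.
    """
    east = math.radians(lon - lon0) * EARTH_RADIUS_M * math.cos(math.radians(lat0))
    north = math.radians(lat - lat0) * EARTH_RADIUS_M
    return east, north


def position_at(fixes, t):
    """Linearly interpolate the vehicle's local (east, north) position at time t.

    Assumes fixes are sorted by strictly increasing timestamp.
    """
    times = [f.t for f in fixes]
    if len(fixes) < 2 or not times[0] <= t <= times[-1]:
        raise ValueError("need >= 2 fixes and t inside the recorded interval")
    # Index of the first fix after t, clamped so t == last timestamp still works.
    i = min(bisect.bisect_right(times, t), len(times) - 1)
    a, b = fixes[i - 1], fixes[i]
    w = (t - a.t) / (b.t - a.t)  # interpolation weight in [0, 1]
    lat0, lon0 = fixes[0].lat, fixes[0].lon  # first fix serves as the origin
    ax, ay = to_local_xy(a.lat, a.lon, lat0, lon0)
    bx, by = to_local_xy(b.lat, b.lon, lat0, lon0)
    return ax + w * (bx - ax), ay + w * (by - ay)


def align_samples(fixes, sample_times):
    """Attach a global (east, north) position to every sensor sample timestamp."""
    return [(t, *position_at(fixes, t)) for t in sample_times]
```

For example, `align_samples([GpsFix(0.0, 35.0, 126.0), GpsFix(1.0, 35.0, 126.001)], [0.5])` places a camera frame recorded at 0.5 s roughly 45.5 m east of the first fix. A production pipeline would also interpolate heading and apply each sensor's mounting transform.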

Sophisticated Environment Model

In addition to the road model, the scenario also includes a three-dimensional environment model that contains all static objects in the vicinity of the route. It contains 70 buildings, 45 fences, walls, traffic signs, traffic lights, streetlights, and trees. Because the environment model is used for a physics-based lidar simulation, the materials of the objects are modeled as realistically as possible. The simulation scenarios are complemented by dynamic objects in the form of other road users. Their type, appearance, and behavior are randomized on the basis of simple behavior models. It is also possible to extract traffic scenarios from the measurement data and replay them in the simulation.
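Randomizing road users on the basis of simple behavior models can be sketched as follows. The article does not say which types, appearances, or behaviors are used, so the catalogs and speed ranges below are hypothetical placeholders. The point of the sketch is the design choice: a seeded random generator makes every randomized scenario exactly reproducible, which matters when a failed test run has to be replayed.

```python
import random
from dataclasses import dataclass

# Hypothetical catalogs; the actual types and behavior models are not published.
ROAD_USER_TYPES = ["car", "truck", "bus", "motorcycle", "bicycle", "pedestrian"]
VEHICLE_BEHAVIORS = ["follow_lane", "turn_left", "turn_right", "stop_and_go"]
COLORS = ["white", "black", "silver", "red", "blue"]

# Hypothetical target-speed ranges per type, in km/h.
SPEED_RANGE_KMH = {
    "car": (20.0, 50.0), "truck": (15.0, 40.0), "bus": (15.0, 40.0),
    "motorcycle": (20.0, 50.0), "bicycle": (8.0, 20.0), "pedestrian": (3.0, 6.0),
}


@dataclass
class RoadUser:
    kind: str
    color: str
    behavior: str
    target_speed_kmh: float


def spawn_road_users(n, seed):
    """Create n randomized road users; the same seed yields the same scenario."""
    rng = random.Random(seed)  # local generator, independent of global state
    users = []
    for _ in range(n):
        kind = rng.choice(ROAD_USER_TYPES)
        low, high = SPEED_RANGE_KMH[kind]
        # Pedestrians get a single simple behavior; vehicles pick one at random.
        behavior = "walk" if kind == "pedestrian" else rng.choice(VEHICLE_BEHAVIORS)
        users.append(RoadUser(kind, rng.choice(COLORS), behavior, rng.uniform(low, high)))
    return users
```

Calling `spawn_road_users(20, seed=7)` twice returns identical lists. Changing only the seed produces a new traffic variant on the same static environment.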

Validation in the Virtual Environment

The different layers of the driving function can now be tested in the newly created virtual world. Among other things, this includes perception algorithms for vehicle and traffic light detection based on a convolutional neural network (CNN), obstacle detection using voxel grid filtering and bounding boxes, and HD-map-based trajectory planning. In the simulation, the relevant data for all sensors (7 cameras, 4 lidars, 4 radars, V2X, vehicle status information, and GPS) is generated with a high degree of realism. The virtual vehicle then has to follow the planned trajectory and adjust its speed to the obstacle and environment information it receives. By varying parameters such as weather and traffic density, any number of different scenarios can be generated and run thousands of times. This makes it possible to detect critical situations before the prototype makes its first real drive in Pyeongdong.
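Obstacle detection with voxel grid filtering and bounding boxes can be outlined in a minimal sketch, under several assumptions. The lidar points are given as `(x, y, z)` tuples in meters. The ground is flat at z = 0, so a simple height threshold removes it. Obstacles are grouped by connectivity of occupied grid cells. All function names and default parameters are hypothetical, and the article does not describe KITECH's actual parameters or clustering method.

```python
import math
from collections import defaultdict


def voxel_key(p, size):
    """Integer index of the cubic voxel of edge length `size` containing p."""
    return tuple(math.floor(c / size) for c in p)


def voxel_grid_filter(points, voxel_size):
    """Downsample: replace all points inside one voxel with their centroid."""
    buckets = defaultdict(list)
    for p in points:
        buckets[voxel_key(p, voxel_size)].append(p)
    return [tuple(sum(c) / len(pts) for c in zip(*pts)) for pts in buckets.values()]


def cluster_points(points, cell, min_z):
    """Group non-ground points whose grid cells touch (26-neighborhood)."""
    cells = defaultdict(list)
    for p in points:
        if p[2] > min_z:  # crude ground removal: assumes flat ground at z = 0
            cells[voxel_key(p, cell)].append(p)
    seen, clusters = set(), []
    for start in cells:
        if start in seen:
            continue
        seen.add(start)
        stack, members = [start], []
        while stack:  # flood fill over occupied neighboring cells
            c = stack.pop()
            members.extend(cells[c])
            for dx in (-1, 0, 1):
                for dy in (-1, 0, 1):
                    for dz in (-1, 0, 1):
                        n = (c[0] + dx, c[1] + dy, c[2] + dz)
                        if n in cells and n not in seen:
                            seen.add(n)
                            stack.append(n)
        clusters.append(members)
    return clusters


def bounding_box(points):
    """Axis-aligned bounding box as ((min_x, min_y, min_z), (max_x, max_y, max_z))."""
    xs, ys, zs = zip(*points)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


def detect_obstacles(points, voxel_size=0.2, cluster_cell=0.5, min_z=0.15):
    """Voxel-filter the cloud, drop ground points, cluster, and box each cluster."""
    filtered = voxel_grid_filter(points, voxel_size)
    return [bounding_box(c) for c in cluster_points(filtered, cluster_cell, min_z)]
```

The voxel filter bounds the number of points passed to clustering regardless of lidar density. The axis-aligned boxes are the simplest obstacle representation that a speed controller could check against the planned trajectory. Oriented boxes or a proper ground-plane fit would be natural refinements.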

Wongun Kim, Ph.D., KITECH

dSPACE MAGAZINE, PUBLISHED DECEMBER 2022

About KITECH

The Korea Institute of Industrial Technology (KITECH) is a research institute specializing in technology transfer and technology commercialization. Established in 1989, it aims to support industry, especially production-oriented small and medium-sized enterprises (SMEs).

About the Author

Wongun Kim, Ph.D., is Principal Researcher for the Smart Agricultural Machinery R&D Group, Jeonbuk Division, at KITECH, Republic of Korea.